When I was a kid in the early nineties there were no apps to remind you of things, so mostly you just hoped you would remember. In particular, I hoped I would remember to check, on the futuristic date of August 29th, 1997, if Judgment Day had indeed occurred, as Terminator 2 said it would.

In the movie, that was the date a military artificial intelligence called Skynet became self-aware, according to time-travelers who had been there. The A.I.’s first decision, when it realized it was a thing, was to start a nuclear exchange in the hopes that it could eradicate human beings before they could unplug it.

I’m not sure what I ended up doing that day—today you can recall what happened on a given date by checking what emails you sent and received—but I don’t think I remembered what day it was supposed to be, and I am sure there was no nuclear holocaust.

Human beings are not great at predicting the future, but we have had a long-creeping suspicion that at some point overly smart computers will cause us huge problems. 1997 is now twenty years ago, and while our computers haven’t started any wars yet, they have begun to take our jobs.

We have robot cashiers, robot pilots, and robot stock traders. Automatic GPS-guided machinery has been planting and harvesting crops for years now. Algorithms are writing sports recaps, novels, and even some not-terrible poems. (Yes, it’s true, and it’s harder than you think to tell the difference.)

Real artificial intelligence, as in the kind that might be hard to distinguish from human intelligence, may not be that far off, and when it gets here, not even specialized, high-skill jobs are safe.

Nobody is really in a position to prevent this takeover. Market forces will bury all kinds of human-driven industries as they become obsolete. This isn’t a new thing—there are no telegram services any more, for instance, outside of the very specialized boutique hipster nostalgia market.

Even if we don’t want this to happen, we will choose it in many cases. I’m ashamed to say it, but I do slightly prefer the robot cashiers at my local Safeway, if only because the interactions I have with them seem less strained. However, there’s no reasoning with them if they don’t give you your change, so they still need to be supervised by a human being.

But even that job—supervisor of robot cashiers—is on its way out. Amazon is already testing a grocery store where you can just walk in, take what you like, and leave. A computerized sensor system (Skynet?) will remove the money from your account.

Millions of people in the US alone make a living doing some kind of driving, and robot-operated cars are already cruising the highways, and killing far fewer people while they do it. Apparently these robots are already so much better than humans at avoiding fatal accidents that it may become illegal, in our lifetimes, to operate a vehicle if you are human.

Helping Them Help Us

All of this job takeover stuff is entirely separate from the other big problem with A.I., which is that we may lose control of our computers once they become smarter than us. This is actually a serious unsolved issue, not just a beloved sci-fi plot.

Programmers know that computers follow the instructions you give them, but what’s difficult is giving them instructions that create the results you want. Powerful A.I. is often compared to the genie in so many fables: it grants what you ask it, but so literally and forcefully that you wish you never found the lamp to begin with.

Author Yuval Harari gives a classic example in this talk. Let’s imagine the first superintelligent A.I. is given the harmless-sounding task of calculating as many digits of Pi as possible. It quickly recognizes that human beings are using energy to run things like coffeemakers and hot tubs—energy that could be harnessed to calculate more digits of Pi. The logical procedure is therefore to subvert and destroy humankind, eliminating its interference in the computer’s assigned goal. And just like that, we’re living in a James Cameron movie.

You might think our computer scientists would account for that possibility. Of course we will account for the possibilities that occur to us. But unless we can outthink the superintelligence at every stage as we develop it—which would defeat much of the purpose—we can’t predict how it will interpret our instructions, especially if it figures out how to lie to us.

However, this problem isn’t as imminent as the robot takeover of the job market. Basically, from here on in, there will be an increasing number of people for whom there is no work they can do better than—or cheaper than—a robot.

Of course, if computers and robots were doing most of the work, maybe we wouldn’t need jobs. Immense wealth could be produced with very little human work. You can imagine, if robots were doing half of everybody’s job, that we could simply work half as much, pay a “robot tax” to share the cost of their development and maintenance, and society would be just as prosperous.

Theoretically, we could also do this if the robots did all the work. The same work is getting done, only by machines that don’t get tired or disgruntled. So why would we need jobs at all? Well, without slipping into a political rabbit-hole, let’s just say that it probably wouldn’t work out that way without a drastically different economic system. It’s easy to imagine that the only people with any wealth would be the small number of trillionaires who supply the robots that do every other job. Another sci-fi movie plot.

The Next Hottest Thing: Being Human

This is all very scary, but we can take solace in a couple of certainties:

Firstly, we have no idea what will really happen. Beyond the imminence of self-driving cars and robot-supervised grocery stores, we can’t be certain it will be catastrophic.

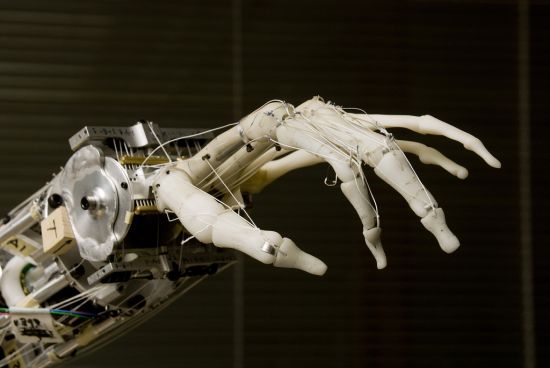

The other one is that there are some things A.I.s and robots can do better than other things, and they probably can’t provide everything we value. At the moment, we can barely build robots that can walk down a hallway and turn a doorknob, let alone write a knock-knock joke.

Can a robot replace your taxi driver? Yes, at least the driving part. Can a robot replace your therapist? Not any time soon. Can a robot replace your dog? Never!

Can a robot replace your barista, or your bartender? Not really. Automated machines can dispense drinks just fine, but drinks aren’t all that’s being supplied. It’s hard to imagine a robot supplying the sense that you are being attended to by another human being who cares whether you’re happy with what you are served.

If we can better appreciate the subtle qualities humans (and animals) offer that robots can’t, we may not end up with a dystopia on our hands. As more and more of our needs are met by machines, demand might shift to qualities that can, for now or forever, only be produced and delivered by humans: empathy, understanding, wisdom, solidarity, humor, real eye contact, little nuances in language and craft, even simple feelings like that of being in a room with another person.

Once robots are fulfilling our current fixation on things and conveniences, we might finally recognize the role of thousands of subtle human qualities that we all value, but never talk about when we talk about the economy. (Not that we need to wait for Judgment Day before we start exploring those “markets.”)

Nobody knows where we’re headed, but it’s interesting to think about how different society would be if people were no longer valued primarily for their ability to supply material goods and services. Productivity will remain the measure of a machine’s worth, but talking about human beings in those terms might become crass or taboo.

If the robots take over all the utilitarian stuff, we might need to become a society of carers, understanders, expressers and connectors, the way we are today a society of managers, designers, laborers and servers. And maybe we’re better suited for that anyway.

***

I'm David, and Raptitude is a blog about getting better at being human -- things we can do to improve our lives today.


My 2 cents – I’m pretty sure that a sufficiently advanced robot will be easily able to “fake” being a human, all the emotional stuff included. However if we get there, the robot apocalypse might solve itself the same way. All the “humane” traits like compassion, desire for peace, love, etc have arisen from a sufficiently advanced brain that is hardwired to require social interaction. Why shouldn’t the robots possess these same qualities? In fact, I think it’s more likely that robots will become a sort of our “caretakers”, purely out of compassionate grounds. They will rule us, but kindly, like a zookeeper taking care of its beloved animals, making sure that their lives are comfortable, they don’t hurt themselves and aren’t hurt by other things beyond their control and understanding. And eventually we might even get the option to become robots ourselves – to upload our minds to a robotic body and live on forever as a far, far more advanced species.

But then again, I’m really not an expert on these things, and I know of several experts who are currently really concerned about the issues outlined in the article, so better listen to them. :)

If we reach a point where computers are actually sentient, then they will be included in the discussion about values, compassion, etc. But that’s quite a ways away. There are computer experts who laugh at the idea of AI taking over the world, because we can barely build a robot that can open an unlocked door.

I think this looks over some things.

One is that of human as homo faber. For many people the capacity to become involved in some work, to get into the flow and strive towards a goal is something that makes lives inherently meaningful. I know of many people who make enough on unemployment to live comfortably but prefer to work.

Second, not all human beings are interested in (or capable of) making a living out of being compassionate, empathic, and connected with other people. Some people have low agreeableness, are highly introverted, etc.

Third, the perspective offered at the end of the article implies a shift to an economy that places a value on stuff like eye contact, empathy etc – which it currently doesn’t. I don’t think the advancing robotic age will provide the impetus to change, since the reason we are making them is because of their efficiency and low cost. If we already did value human touch and contact in our interaction, it wouldn’t occur to us to build robots.

I think I was pretty clear that the vision at the end of this post is just one possible way we will adapt to the impending change in how work gets done.

Meaning is always a huge issue for us, and our need for it will become more obvious as robots put more people out of work. People derive different amounts of meaning from their work, depending on what it is. But people work to pay the bills.

> If we already did value human touch and contact in our interaction, it wouldn’t occur to us to build robots.

We can’t value more than one thing? Regardless of our need for human contact, we’re always looking to get more done with less effort, and we’ve been building machines to help us do that since the stone age.

Absolutely. The problem is that one of our main concerns (aside from productivity and making money) is comfort… We all long for improvements that will make our lives more comfortable and bearable, but we then complain that our jobs are being taken away and we have nothing left to do.

The same goes for the environment: most of us would like to save the planet, but most changes that need to be made appear so uncomfortable. We struggle with the idea that we need to compromise, or even renounce some of our improvements, in order to achieve balance.

Humanity’s unwillingness to backtrack out of certain technological advancements that might not be for the best worries me more than our (future) robot overlords.

Yeah that is the driving factor here: our moment-to-moment desires and how we field them. That’s why we can’t reconcile our desire to drive everywhere with our desire to not ruin the planet. It does come down to individual ethics, which comes down to how we respond to discomfort in the moment.

I do think there’s a point where there’s such a thing as too much tech. At a certain point you just need to let flawed humans exist and complete work without the aid of AI. I would be very interested to see the financial implications of everyone being out of work because the robots do everything (unlikely, but possible).

Definitely there is. It’s just hard to see how we can move backwards, or even slow down. I know that I feel a pretty strong desire these days to “de-tech” in certain ways… to use manual tools, to reduce screen time, etc.

Personally, I avoid the self-checkout machines. After all, they do not come to my work to do my job! Seriously, I know it is all inevitable but I like to slow them down. I still do my banking “by hand” and in person… even though the tellers are trained, and get bonuses, to get me to do it online. I like having interactions with people every day. I do engage with cashiers and consider some of them to be acquaintances by this time. Of course, I live in the South where courtesy is still part of our charm. I’ll go with the human every chance. I savor those smiles. Guess what I do for a living? I am a professional artist and I know that there is a computer out there better than me but, just for now, I’ll keep trying to be Van Gogh!

That’s interesting… I prefer genuine human interactions too, but I find when they are mediated by a commercial structure around them (as they are for bank tellers and cashiers) then they’re often not the kind of authentic human interaction I find very rewarding. This varies a lot obviously… the smaller the business, the more space there is for genuine interaction.

Luckily art is going to be one of the most resilient industries to computer takeover. Even if computers can produce a Jackson Pollock clone, nobody will buy it if they know a computer did it.

This reminds me of a book with a very interesting idea of how our economy could work in the future. I read it at the end of 2013 and I kind of like the idea of being credited for all the contributions I make. Who Owns The Future? by Jaron Lanier

Will add it to the “to check out” list, thanks Michele.

Why do you assume that they won’t be able to be like animals and humans? When you break it down, humans and animals are just very complex biological machines. Sure there is a difference in how we operate: hormones, electrical potentials, neurotransmitters, etc. But the gist is the same. Input goes in, gets processed, output goes out. Our brains are just a very (very) complex neural network.

So given enough technological advancement robots will be indistinguishable from humans. The more interesting question that arises here is whether or not we will consider them as conscious beings and what that means for consciousness itself. If they’re so complex that they can “fake” feelings, jokes, laughter, etc… Who are we to say those things will not be genuine? What is genuine and what is fake? What determines this?

The only one that is 100% conscious for sure is yourself. Other people could just be squishy robots faking it, right? Those will be interesting times.

I don’t assume that AI will never pass the Turing test, or go further than that even. But I think we are profoundly different than machines. Obviously the process in creating one would be entirely different than the evolutionary process that created us, and I can’t see how we’d end up with something indistinguishably similar.

We can make computers that are unbeatable in chess, but that’s not even close to making one that can review a movie, and that’s hardly the most nuanced thing humans do. I think we underestimate the subtleties inherent in what it’s like to be (and be with) a human. There’s a great episode of Black Mirror that explores this: a mail order service can build a replica of a lost loved one, and download all their memories, speech patterns, and so on. On the surface it’s convincing. But after a while it seems grotesque, because it’s just not quite human and it’s obvious.

This is inevitable, isn’t it? The day when robots take up everyday jobs of human beings. Fewer errors, fewer accidents, less cost and more efficiency. We can’t prohibit it. But we can, as Sal Khan said in his talk, invert the pyramid. We can build a society where jobs that involve dynamic thinking and creativity increase, and the number of ‘manufacturing-line’ jobs decreases. That way, humankind will still be in control of the robots. And we can live a much better (and more productive) life by paying ‘robot tax’.

It’s inevitable that a change will happen but it’s hard to know how it will go down. I hope we take a really conscious role in how it happens though. I like Sal Khan’s vision.

Whenever this subject arises, I think about my own profession, dentistry. The amount of fine eye-hand coordination and variation in movement is incredible. I watch videos online of robots barely being able to pour water into a glass and hand it to a human, and I agree, it is going to be a while before we get even close to allowing them near our mouths (yikes). I also feel that it is a delicate interpersonal interaction that occurs when one person is operating on another conscious person’s body with sharp instruments. People need to be able to have some empathetic creature on the controlling end of the drill that can and will respond to the patient’s feelings. Will robots get there? Who knows? We do need/like to be attended to by others. I feel like that is my primary job. It takes a great deal of listening and making sure others feel heard. Still waiting for the great listening robot. Therapists, you are safe too.

> I also feel that it is a delicate interpersonal interaction that occurs when one person is operating on another conscious person’s body with sharp instruments.

Yes! The service is more than just the moving of instruments. Even if we can produce data that shows robots are safer (as in the case of cars) it’s a hard sell to get people to put their safety in the hands of an algorithm instead of a trusted human being.

People are more nuanced in our interactions (think of the “warmth” that we feel when sharing eye contact with a stranger when we both see something amusing or touching in a restaurant or on the street). Awareness–as in a robot picking up on the same social cues and acknowledging this by displaying “surface” or mimicked signs of acknowledgement or appreciation–is not qualitatively the same as the interaction between the two human “agents.”

Is it possible that there truly is a non-corporeal aspect to living organisms (our spiritual “essence”) that can’t be replicated in material form, no matter what degree of machine “awareness” is involved?

The new Netflix film with Robert Redford (The Discovery) due out at the end of March promises to add to the conversation around the existence of “soul” and agency. The fictional “discovery” in question is apparently the scientific confirmation that our human “essence” survives death. Mass suicides are one of the imagined unintended consequences, as people choose to escape dealing with their life situations by choosing to “move on.” Might intelligent robots also make such a choice, especially given that their “lot in life” is likely to be one of endless drudgery? The qualitative nature of self existence is profoundly significant, especially if it turns out that our sense of “being” is rooted in something more than just self reflexive circuits–be they biological or mechanical.

That is a good question, and I’m not sure. I don’t believe in souls or any other religiously-derived notions of additional layers of experience, and I do think that any other layers of experience/existence that matter to humans are by definition discoverable by humans. But that doesn’t mean we can capture all the nuances that are there, or even will want to, with robots. I guess that’s what I’m really getting at in this post… that our experience is mostly about nuances that we struggle to even describe, and if we can’t even describe them how do we expect our robots to capture them?

Having read your blog for a while and noted the value you put on human interaction, I was surprised to read that you prefer the “robot cashiers” at your local Safeway because “interactions with them feel less strained.” In my own experience, the human interactions with my local grocery cashiers are a small bright spot that provides a sense of community, even for those living in an urban environment. I’d urge you to approach your next interaction with a (human) cashier with mindfulness for those “qualities that can, for now or forever, only be produced and delivered by humans: empathy, understanding, wisdom, solidarity, humor, real eye contact, [and] little nuances in language and craft…” Everyday service interactions are little opportunities to acknowledge our common humanity.

I was mostly joking. I do have good interactions with many people on the other side of a counter regularly. A busy grocery store checkout is a particularly robotic social situation though. The employees are constrained in what they must and can’t say by their store policies, they don’t get to choose whether they talk to you, there is literally a conveyor belt advancing the interaction, there are always people behind you, etc. I do like short interactions I have with shop owners, counter staff at smaller places, and other people who are currently at work, but there was something about the atmosphere at my local Safeway that made genuine connections rare, at least for me. It is the only grocery store in the most densely populated area in the city. Having worked in grocery stores a lot in my past may have contributed to a negative association, haha.

Beautiful piece, David. You should be on a panel to help decide the humanistic guidelines and limits of people interacting with advanced AI.

If the accuracy of our predictions from the past is any indication, someone from 50 or 100 years from now may want to tell us something like, “Your future is completely different than what you thought it might be, with many benefits and problems you never imagined. Most of both really aren’t the result of technology; they’re due to changes in people’s outlooks and opinions. And we’re still predicting doom from budding new creations that we envision one day getting out of control.”

Thanks Ron. I don’t think I’m qualified for any kind of serious panel though… these are just musings but there are real experts on the topic.

Whenever I think about imagining the future in 50 or 100 years, I just try to remember how incapable we are of that level of prediction. Today the internet is this prominent social and cultural force, but 30 years ago it would be hard to even describe it, let alone predict it.

The vision at the end is a lovely thought, actually! I’d like to think that clarity could come about as we focus on our spirits rather than our “stuff”.

Well we do know that consumerism was not always a prominent force in human culture, so there’s no reason to believe it is a permanent fixation. I wonder if the advance of bots will be a catalyst in moving us past it.

This has been my personal favorite dilemma, ever since I started meditating. I approached it more from the perspective of self-awareness for humans vs. self-awareness for machines, and how we have focused too much on AI, which is not going to do much when it comes to improving our real quality of life (http://www.abhijeetsreflections.com/2016/08/21/next-big-thing/).

I like your perspective. It’s more optimistic, and plausible.

I do hope awareness is the next big thing. Without quite planning it this way, making it a bigger thing has become my career.

Maybe it’s my bias towards spending my days in a mindful way, and hence the likelihood of meeting such people, but I hear more and more people talking about mindfulness, compassion, happiness.

I myself woke up about 2 years ago, and realized how negligent I was about my own life. Reading blogs like yours helped me find that interest in what appeared to be the more subtle aspects of life (now they have become core aspects of life).

I recently visited my country of origin, India, for 3 weeks, and one of the things I noticed was ads about “Inner Engineering” and “Yoga” everywhere. It is interesting because historically Indian culture has had access to these practices for centuries (maybe a thousand-plus years). But growing up in India, I never quite got the hang of self-awareness. It was only in my mid 20s, living in Seattle and contemplating my life, that I woke up.

I love taking mind-bending mental journeys like this. But it is also a horror movie to me and utterly terrifying to contemplate. I don’t have faith that society will share the spoils when this day comes. The work week hasn’t changed in the face of massive productivity increases thanks to technology, so why would we think that outcome could exist in the future? I suppose if 90% of the people can’t work, it is the only way you have an economy, but still, I have faith that whatever system arises in response will grossly favor the few “machine owners.” I shudder to think of what it must have been like to live 100 years ago with no sanitation and people just being able to beat their wives and kids. Yet if I had a chance to go back 100 years, or forward 100 years, I know which direction is more enticing to me. Not sure what that says about my psyche!

Yeah, consumerism is kind of the hole in the canoe… no matter how much we increase productivity, if consumption simply increases along with it then most of us never really get wealthier. However, consumerism hasn’t been around for all that long and there’s no reason to believe it’s the permanent state of things, especially knowing all of the ways in which it is unsustainable. I wonder if in 100 years consumerism will have burned itself out. I think if I had to go forward or backward 100 years I’d take the gamble and go forward.

This is such a freaky subject! Exciting, but freaky. What to do with the advancement of tech? Move backwards. Move outside to a simpler life. Start RUNNING! Running is the pure sport of anti-tech. Get outside and away from all the madness while you still can! Ha.

I am hoping we’ll see market forces support a kind of low-tech movement. People voluntarily de-technologizing their lives and adopting more non-electronic activities. A good run sounds great right about now.

As an insider to the industry, I can say we are already seeing the replacement (automation) of humans at an unbelievable pace.

The most impacted will be the countries that have been handling outsourced jobs (mostly developing countries). I say this because it will be catastrophic: most of these countries don’t have a strong social security system like developed countries do, are very ill prepared, and have failed to anticipate what’s coming.

As you pointed out, we may not be able to stop this, but we can certainly prepare for the days to come, with the world fast becoming profit-oriented and comfort-oriented. It’s time humans slowed down the desire to “always want more”.

Social safety nets are going to become a bigger part of the political picture as automation takes more jobs. There’s no rule that says there should be just as many ways to get paid as there are people, yet somehow we think unemployment is an avoidable problem. That will become more and more obviously false, and we’ll have to start talking about basic income programs and other forms of social safety nets.

GREAT ESSAY DAVID! You truly framed this discussion in a perspective that not many other people are talking about. Which, of course, is why I continue to look forward to your thoughts. You have a refreshingly positive outlook that seems to be greatly lacking in today’s society. Thanks for providing that bastion of rational sanity with a humanist perspective. We ALL need to slow down and get back to being human.

Thanks Chris. Humans are great, at least when we’re doing the stuff robots can’t do.

“Can a robot replace your therapist? Not any time soon. Can a robot replace your dog? Never!”

ELIZA, the Rogerian therapist program, was one of the earliest successes of A.I. in the sixties. Its author, Joseph Weizenbaum, wrote it with some elementary natural language processing algorithms to parody more earnest efforts at MIT, and was horrified to find it taken seriously by the engineering intelligentsia (a holocaust survivor, Weizenbaum had more reason than most to question the intellectual vanity of progress).

Since ELIZA used Rogers’ “active listening” technique of repeating back to the client large parts of what he or she just said/typed, the program essentially functioned like a mirror, an interactive journal. It’s still bundled in the Unix text editor, Emacs. YouTube has an example of a session with an online version:

https://www.youtube.com/watch?v=6bcirXS6jiU

As for the dog, google the Sony AIBO, which was hugely popular in Japan for a few years.

I remember Eliza! I had a version on my Apple IIe, which proves how basic it is compared to a real human interaction.

I have seen AIBO, but I’m not sure if anyone would seriously argue that it can offer the same connection or affection as a dog. It’s just a dog-shaped robot.

David, I’m about to pay you the biggest compliment I’ve got in my repertoire.

Since I first heard Creep as an angsty 13-year-old in 1993, I’ve been a die-hard Radiohead fan. While others find them depressing and alienating, I have always found them consoling and warming. A steadfast companion through every trial I’ve faced as an adult. Seeing them live 2 days ago was like coming home.

The topic, the arc, the resolution, and profound sentiment and radical hope of this post remind me of Radiohead. It immerses my soul in love.

To a fellow Radiohead fan that is quite a compliment, thank you! They have a way of helping us find that certain kind of beauty and belonging in scary/alienating things. I hope they come here some day :)
